…as perceived by a human; this blog was written entirely by the author, and not by a language model. This is part of a series of posts giving you a complete primer on transformers, LLMs, the GPT family of models and beyond:

- Part 1: What are transformer models and LLMs? (this article)

- Part 2: How ChatGPT, GPT-4 and beyond came to be (coming soon…)

- Part 3: Everything you need to know about GPT-4

At Genie we’ve been experimenting with generative models for the last 5 years. When we started out, we used GANs - which was the subject of my MSc Machine Learning thesis. These models were difficult to train and were often unstable, because they rely on reinforcement learning techniques to pass a discrete gradient between two neural nets. Woah there! Too much terminology? Let’s back track and hit some easy definitions…

Machine learning definitions

- Machine learning: any mathematical model that learns from data

- Artificial intelligence: the marketing term for machine learning 🙊

- Generative model: any model that learns the distribution of P(X,Y), in comparison to discriminative models which tell you P(X|Y).

- In English: generative models give you the entire distribution of input and output pairs, rather than just predict an output given an input. This means you can do more with them, such as generate entire sentences rather than just predict the next token!

- Language model (LM): a model that determines the probability of a given sequence of words occurring in a sentence

- Large scale language model (LLM): an LM with a massive amount of parameters, typically more than 1 billion.

- GPT-3: An LLM with 175 billion parameters, with a similar architecture to GPT-2.

- ChatGPT: An LLM with 1.3 billion parameters, improved through human-labelled data and reinforcement learning to align more with human instructions.

Why machine learning became so popular in the last 10 years

The present decade of machine learning has come into the fore due to increasing compute power (á la Moore’s law) making large scale neural nets computationally tractable. It’s worth noting that neural networks, however, were first proposed as early as 1944.

The problem with simple neural nets, no matter how big you make them, is they are based on many layers of linear regression stacked upon each other, and later layers are likely to “forget” information (passed through by differentiation i.e. gradients) learned from earlier layers - especially if you have millions, and often billions of parameters!

Solving catastrophic forgetting

As a result, models such as recurrent neural nets (RNNs) became popular (although once again this was first conceptualized in 1986 by David Rumelhart et al). The “recurrent” part of RNNs have a mechanism for folding the gradient of previous timesteps into the current timestep, resulting in less “catastrophic forgetting”. By that I mean they use a sequence of hidden states ht, as a function of the previous hidden state ht-1 and the input for position t.

In the context of textual data, each timestep would mean one token (essentially a character) of text. So this meant the models could learn about much longer lengths of text data and still produce accurate results. RNNs still had their limits though - this sequential folding of previous timesteps into the current hidden state ht makes it difficult to parallelize training across the training data, despite various remedies being attempted such as factorization tricks.

Introducing transformer models

In 2017, everything changed.

Vaswani et al published the (I would say legendary) paper “Attention Is All You Need”, which used a novel architecture that they called the “Transformer”.

The idea for the transformer wasn’t totally new, however, because it was (and still is) based on the self-attention mechanism that the best performing RNNs and CNNs (another type of neural network often used in image classification) were using. Vaswani et al observed that if you retain this self-attention mechanism, but drop the RNNs and CNNs, you still get high performance but with much better parallelization and less training time.

In this sense, transformers can be seen as somewhat of a simplification on previous state of the art - not a complication.

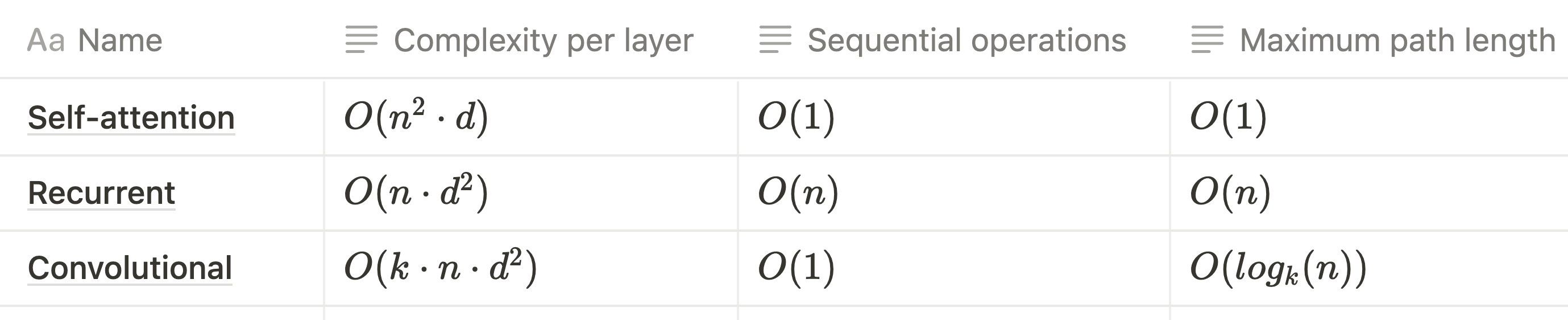

The self-attention mechanism of transformers provides 3 main benefits:

- Lower computational complexity per layer (if n < d, i.e. the number of data points is smaller than the dimensionality of the data)

- More parallelizable computation (measured by the minimum number of required sequential operations)

- Decreased path length between long-range dependencies in the network, thereby decreasing the likelihood of catastrophic forgetting

The below table shows the complexity of transformers against RNNs and CNNs for reference:

One potential downside to note here is that since transformers move the layer complexity to a function of n rather than d, they do tend to have limited sequence lengths i.e. operate across a limited text length. This is why there is a limit on the amount of text (tokens) you can input into the GPT models.

Nonetheless, this dramatic decrease in training time and costs meant that companies (with lots of cash to burn) like OpenAI, Google and Meta where able to create gigantic models with billions of parameters in order to increase accuracy across a wide variety of tasks.

What are LLMs?

Large scale language models or LLMs are simply language models on a large scale (typically > 1 billion parameters, although such ideas of norms will vary over time). A language model is any model that provides a probability distribution over a sequence of words - that is, the likelihood of each word or set of words occurring within the sentence. In mathematical parlance, if the sentence contains n words, the model simply defines P(w1, …, wn).

This is useful because models can then perform a range of tasks, such as predicting the next word in the sentence P(wt | wt-1. In terms of what sort of tasks are possible, there is a difference between generative and discriminative models, the former being more flexible, however, this will be the subject of a future blog post. In another sense, flexibility is also a critical factor in why transformers have become so popular, which is covered in the section below.

Flexibility and AGI

Massive models (and performance metrics generally seemed to scale linearly with number of parameters) yielded greater performance, however greater accuracy is only a part of the story as to why transformers became so popular.

In my view the more important feature of these models are that they are autoregressive, sequence-to-sequence (seq2seq) models.

What does autoregressive, seq2seq mean?

Autoregressive simply means predicting future timesteps based on past timesteps. Autoregressive models have historically (see the pun there) been used in financial forecasting, among other areas. In the NLP domain it’s a useful way to predict future tokens or words in a sentence, for example.

Seq2seq simply means we take one input sequence and generate an output sequence. We refer to them as sequences because often this is discrete data, such as characters or tokens in a sentence. This means we have to use methods like word embeddings to convert each token into a vector of numbers in order for the algorithm to process them.

However, the key point is that if you are taking any arbitrary sequence of inputs, and producing an arbitrary sequence of outputs, this is a highly flexible approach because sequences can represent many real world problems.

As discussed, it could be an input sentence of tokens and an output sentence. It could be rows of pixels in an image, or bytes of sound in speech.

Turns out, “sequences” can both represent data cross time (such as speech) and other data layouts quite well, which to me at least, is quite philosophically interesting.

Practically speaking, this even means you can perform classification tasks such as: predicting the sentiment between 5 different options of a tweet, simply by asking a question and expecting an answer, because the question and possible output classes can simply form an input sequence of characters (tokens), and the output can also be a text sequence.

This is game changing, because one model can do many, many tasks, which is what we might consider as a colloquial definition of “artificial general intelligence”, or AGI.

In the next blog post in this series, I will explain how we got from transformers to chatGPT, GPT4 and beyond.

If you want to skip to post 3 in this series - that's 'GPT-4 - What's New - everything you need to know'.

For now it’s worth noting that this movement towards ever more flexible models and AGI presents a huge opportunity to replicate knowledge work like legal assistance.

At Genie we are developing proprietary models trained on private datasets to create the best performing AI legal assistant or AI lawyer in the market.

For early access to our AI Lawyer sign up here.

.png)