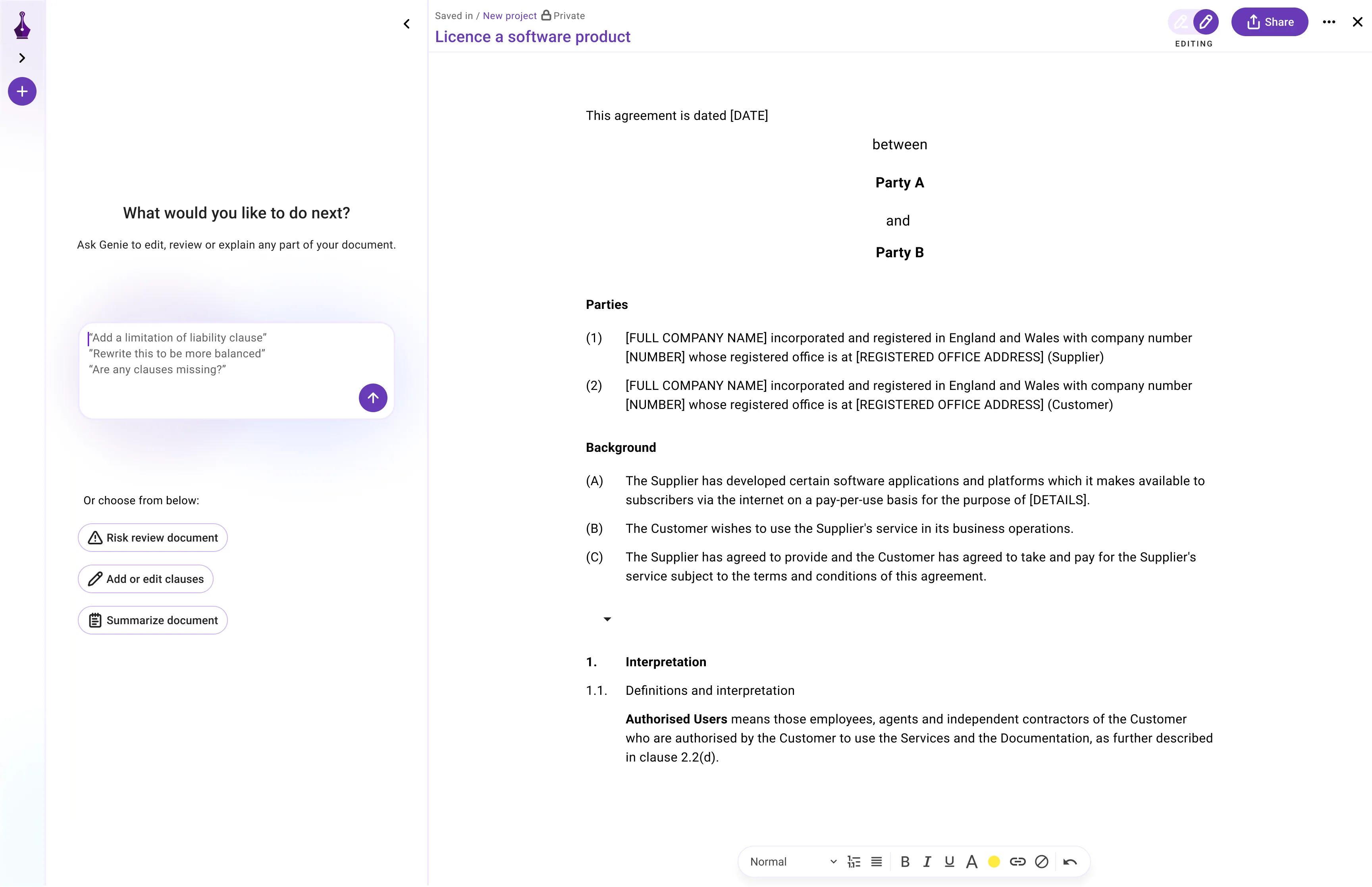

I had the pleasure of sitting down with Shauli Jacks from SafetyDetectives to discuss my role as Growth Marketing Lead at Genie AI. We delved into a broad range of topics — from the genesis of Genie AI to its innovative role in the legal tech industry. Together we unraveled the ethical considerations of AI implementation in the legal sector while shedding light on the challenges posed by the complex nature of legal language.

My Background and Current Role

Shauli, thank you for having me. As for my background, I've always had a knack for founding businesses that help other business owners concentrate on their passion: providing high-quality goods or services to their clients. That means shifting their time away from demanding administrative tasks that bring them little joy.

I joined Genie AI in June 2021 with the goal of growing the business. Since then, our growth rate has increased 500-fold.

The Founding of Genie AI

Our vision for Genie AI was born when co-founders Rafie and Nitish were pursuing their MSc in Machine Learning at UCL. They found that:

- Generative algorithms worked brilliantly with structured, text-based professional services work such as legal documents.

- The law firms they were working with used templates extensively, but those templates weren’t available to everyone without a high cost.

- Many of their friends in law were horribly overworked, doing repetitive or low-value, low-risk tasks that could be taken off their plates.

Our ultimate aim is to create a legal assistant that performs like a team member, completing tasks at 100x the speed while drawing on more knowledge than any single human could possibly hold.

Ensuring the Accuracy of Legal Documents

First off, it’s not just Genie AI that believes GenAI can help: a 2023 Thomson Reuters survey found that 82% of law firms believe GenAI can be efficiently applied to legal work.

Second, we carry out extensive testing with our world-class legal advisors, and we input relevant legal and commercial context (some of which is user-specific) to ensure that users receive the most accurate responses possible.

Third, we already know from research that GPT-4 scored 290+ on the bar exam, which places it in the top 10% of human test takers. What’s more, we know it has incredibly generalised knowledge, meaning that it’s not skewed by the specialisms that career lawyers pick up. This makes it less biased, more objective and more accurate than many of the lawyers and law firms available to small businesses.

Fourth, we experiment a lot with prompts and different models, and work on these as a team. Good prompts in our domain need some prompt engineering know-how, but also extensive legal expertise distilled into the prompt, including, crucially, which context is relevant for a given task. Again, this is more relevant for the next releases we’ll be deploying soon, such as AI RAG Review and a feature that lets the user create any type of market-standard document from scratch.

We haven’t, however, seen any data suggesting that poor-quality output is being generated. Users love it. Lawyers love it. It should be treated with due caution, as all new technologies should, of course. That said, we know it is only going to get better and better. Given that GPT-4 was 10x better at passing the bar exam than GPT-3, I’d say we’ll have an AI Lawyer that is generally better than the top 10% of human lawyers within a couple of years. That’s just absolutely incredible, isn’t it?

Genie AI and Confidentiality

Yeah, this is a great question. We are doing a lot to address the general ‘feeling’ of concern about where input data goes.

Our input data currently flows through us to either OpenAI (ChatGPT/GPT-4) or Anthropic (Claude 2).

We know Microsoft and OpenAI are partners, and people trust Microsoft with the most sensitive of documents. The same logic applies to OpenAI’s partnerships with Google, Atlassian (owner of JIRA) and Salesforce.

Similarly, Anthropic are partners with both Google and Microsoft, as well as Zoom. These are the leading technology companies of our age, partnering with the same AI platforms we use, and using the same models that we use.

Add to that the fact that no member of the team at Genie can view your documents, that we use bank-grade encryption at rest and in transit, that we are ISO 27001 certified, and that we undergo regular external audits. We know that legal data is about as confidential as it gets, and yet people email it to one another and make the human errors we’re all prone to (such as accidentally sending it to the wrong person).

We are safer than email and more real-time collaborative than Office 365 (e.g. there’s no need to send different versions back and forth, or have a version just for internal purposes before sending over ready for the client to review).

Using Genie AI to draft and edit your documents minimises the chance of human error and gives you more control over access and permissions. In future (sneak preview here), it will allow you to agree documents clause by clause. It’s the next generation of document editor. MS Word was never built for editing or negotiating legal documents, but that’s exactly what Genie AI’s SuperDrafter Editor is built for!

Genie AI's SuperDrafter editor is safer than email and provides more granular access levels and permissions than MS Word (which isn't built for legal work)

Ethical Considerations of AI in the Legal Sector

As with any truly disruptive technology, there are definitely ethical considerations. With the UK’s AI Safety Summit coming up, it’s clearly on everyone’s mind.

Specifically in legal, I think the number one caveat for the end-user is to double-check the AI’s output. AI should be a helpful and honest assistant, but it may sometimes be subtly wrong, and you need to be the arbiter of what’s right and wrong.

You hear a lot of academics saying that LLMs and these AI models are just predictors of the next word or sentence (and Rafie has written a great series of articles on these topics) but the interpretation of the general population is that it’s better than that. Cleverer than that.

Our job as creators in this space is to provide that feeling of magic (we are Genie after all), while mitigating and minimising any errors through the way in which that magic is produced.

One ethical dilemma we face, therefore, is how to weigh delighting our users against cautioning them. We want our users not to have to use a lawyer, but we also don’t want them to miss an error the AI has generated. We don’t want them blindly believing that AI is 100% accurate all of the time (no human is, after all).

Overcoming Challenges in Legal Language Interpretation

Now this is an area where AI excels. We have seen AI work fantastically well in transcription, case retrieval, language simplification, language review, and other structured tasks. As the models of the day are attention-based (i.e. transformer models), the trick is for us to help the models focus their attention on the relevant area when generating a response.

Different prompting methods have helped us here, such as using chain-of-thought, prompting the model to reflect on the requested task in light of the specific user’s input, and asking it to re-evaluate how it approaches the task (and therefore what it outputs).
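To make those prompting methods a little more concrete, here is a minimal Python sketch of a two-pass flow: a chain-of-thought prompt followed by a reflection prompt. It is purely illustrative and not how Genie AI's prompts are actually written. The `ReviewTask` class, the function names, and the `ANSWER:` marker are all hypothetical, and the model call itself is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class ReviewTask:
    """Hypothetical container for one request (not Genie AI's real schema)."""
    instruction: str   # what the user asked for
    user_context: str  # user-specific legal or commercial context
    clause: str        # the contract text to work on


def build_draft_prompt(task: ReviewTask) -> str:
    """Chain-of-thought: ask for step-by-step reasoning before the answer."""
    return (
        f"Context: {task.user_context}\n"
        f"Clause: {task.clause}\n"
        f"Task: {task.instruction}\n"
        "Think through the task step by step, then give your answer "
        "after the line 'ANSWER:'."
    )


def build_reflection_prompt(task: ReviewTask, draft: str) -> str:
    """Reflection: ask the model to re-evaluate its own draft for this user."""
    return (
        f"Task: {task.instruction}\n"
        f"Context: {task.user_context}\n"
        f"Draft response:\n{draft}\n"
        "Reflect on whether the draft addresses the task for this specific "
        "context. List any problems, then give a revised answer after the "
        "line 'ANSWER:'."
    )


def extract_answer(response: str) -> str:
    """Return the text after the last 'ANSWER:' marker, else the whole response."""
    _, found, tail = response.rpartition("ANSWER:")
    return tail.strip() if found else response.strip()
```

In use, the draft prompt would be sent to whichever model is in play, the reply passed into `build_reflection_prompt`, and `extract_answer` applied to the second reply. Keeping the reasoning separate from the final answer makes it easier for a human reviewer to double-check what the model did.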
