Introduction

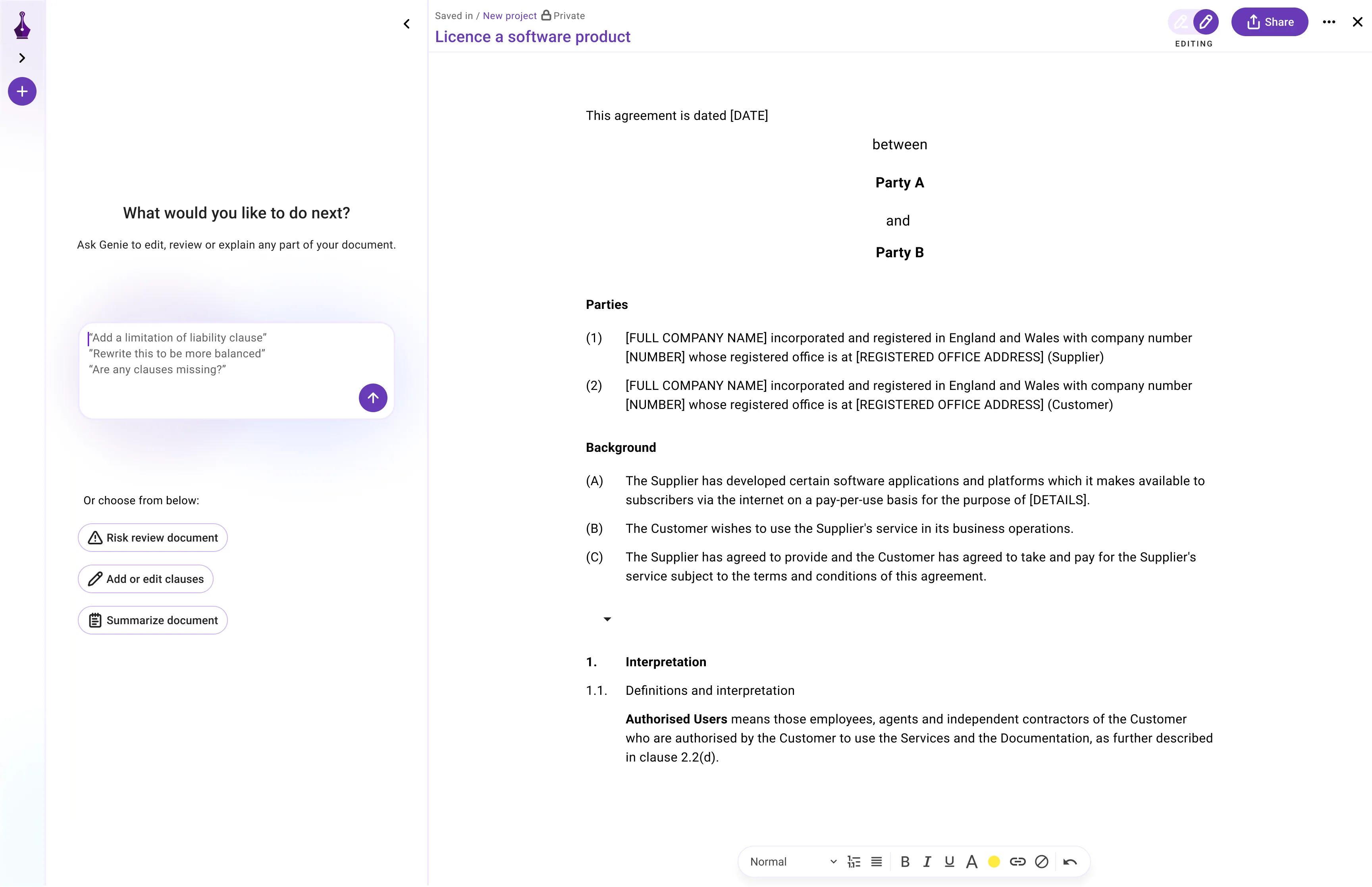

At GenieAI, we have been dedicating ourselves to refining our product's user interface. Our relentless pursuit of improvement led us to explore new design approaches and leverage cutting-edge technologies to unlock new possibilities for our users. One particular area of focus has been the development of a real-time collaborative feature within our text editor. In this blog post, we will delve into our decision-making process, the challenges we encountered, and the engineering solutions we implemented to bring this feature to life.

The Initial Decision:

When we embarked on the journey of creating our text editor some years ago, we made a conscious choice not to support real-time collaboration. Two key reasons influenced this decision:

- Complexity Concerns: We believed that introducing a real-time collaborative feature could potentially complicate the architecture without significantly enhancing the user experience.

- User Experience Considerations: We also took into account our users' preferences and concerns. The notion of simultaneous edits to a legal contract, for instance, might make it arduous for parties involved to track and review all the changes made in real time.

However, we pondered upon a pivotal question: What if we could implement a real-time collaborative feature that effectively tracked document changes? This would allow us to introduce the benefits of real-time collaboration while eliminating the conventional process of offline document sharing and manual merging of changes upon its return.

Motivated by this tremendous opportunity, our design team commenced work on providing the optimal user experience in a multi-party collaborative setting. Simultaneously, our engineers delved into the technical implementation required to turn this vision into reality.

In this article, we will shed light on how we tackled the hurdles encountered throughout the implementation process. We will discuss the specific technologies employed and delve into the major challenges we faced, providing you with an insight into the intricacies of bringing real-time collaboration to life.

The technical problem

Our text editor harnesses the power of ProseMirror, a popular and robust framework tailored for building custom text editors. This framework impressively emits events in real-time whenever a change is made to the document, enabling us to transmit only the specific changes to the network. However, a potential conflict arises when two users concurrently modify the same part of the document. Fortunately, ProseMirror comes equipped with a built-in "conflict resolution" mechanism, deftly resolving conflicts and merging changes.

While ProseMirror's native support for real-time collaboration simplifies our development process, it solely addresses the client-side infrastructure, leaving us with several challenges to tackle on the server-side:

- Data Exchange: How do we efficiently exchange data between users connected to the same document?

- Security Implementation: How can we establish a robust security system that restricts data exchange to authorized users for specific documents?

- Change Storage: How do we effectively store changes in a real-time collaborative environment?

- Latency Management: How can we ensure acceptable latency levels between users?

- Testability: How do we engineer our code to be easily testable with comprehensive end-to-end (E2E) tests?

- Flexibility and Scalability: How can we design our codebase to be flexible and scalable as our user base grows?

- Development Roadmap: How do we strategically plan our development roadmap to address evolving needs and enhancements?

- Risk Assessment: What potential risks and challenges lie ahead as we progress with our real-time collaboration implementation?

In the upcoming sections, we'll delve into each of these server-side considerations, offering insights into the solutions we've crafted and the strategies we've adopted to tackle these challenges head-on.

Exchange the data - WebSocket vs Server-Sent Event

The initial challenge we tackled was establishing effective communication between the frontend and backend of our application. Specifically, we needed a seamless way to transmit document changes among connected users.

Our requirements were crystal clear. We sought a method to exchange data swiftly between the frontend and backend, ensuring prompt updates to the Document. Adopting a generic HTTP approach seemed suboptimal as it risked compromising the user experience due to potential delays. Additionally, relying on periodic endpoint calls would result in sluggishness. We needed a solution that was inherently "faster."

Through thorough research, we narrowed down our options to two potential technologies: WebSocket and Server-sent events (SSE).

Upon closer examination, WebSocket appeared unnecessary for our use case. It appeared more suited for applications akin to collaborative whiteboards, such as Figma or Miro, where low communication latency is of utmost importance. Furthermore, WebSocket implementation required infrastructure-level modifications involving the TCP/IP channel—an unnecessary complexity for our infrastructure. Considering that typing activity within our text editor typically occurs at a slower pace compared to the rapid mouse movements in whiteboard applications, WebSocket did not offer significant value in our context.

Consequently, we turned our attention to SSE as a viable alternative approach. SSE presented itself as a promising candidate for enabling real-time communication and fulfilling our requirements.

At its core, Server-sent events (SSE) provide a "sort of real-time" communication channel between the server and client by utilizing the standard HTTP protocol.

Wait, didn't we mention that HTTP was slow? Let me explain further.In contrast to a typical HTTP GET operation, SSE facilitates persistent communication between the client and server. This means that as soon as a new message becomes available on the server, it can be broadcasted to all connected clients.

It's important to note that SSE communication is unidirectional, allowing the server to push messages to clients while preventing clients from pushing messages to the server.

We didn't perceive this as a limitation since a client can still send a message to the server using an HTTP POST request, which can then be broadcasted to other clients connected to the same document in near real-time.

Considering these factors, we recognized several potential benefits of SSE and decided to conduct experiments to assess its functionality. Here's a summary of our findings:

- Significantly faster than a standard HTTP GET operation due to the server's ability to push messages through a persistent TCP/IP channel.

- No upfront infrastructure investments or modifications required since SSE is built on top of HTTP.

- More scalable and easier to maintain compared to WebSocket.

- Proven reliability and widespread adoption in numerous companies, making it a production-ready solution.

Considering these advantages, we confidently opted to utilize SSE for our real-time communication needs.

GraphQL Subscription, yes or no?

Having decided on SSE as our communication approach, we faced the question of how to implement it since SSE is merely a specification and not a ready-to-use library.

During our search, we came across different categories of SSE libraries:

- SSE libraries for web servers.

- SSE libraries designed specifically for GraphQL, known as Subscriptions.

Given our existing usage of GraphQL, we were intrigued to explore the potential benefits offered by GraphQL Subscriptions.

So, what exactly is a GraphQL Subscription? In its simplest form, it represents a long-lasting read operation that notifies subscribers whenever a value changes. This appeared to be a perfect fit for our requirements: when the document changes, we wanted to send those changes to clients who had subscribed to that particular document. It sounded highly promising!

By leveraging GraphQL Subscriptions, we could harness the power of real-time updates and seamlessly integrate them into our existing GraphQL infrastructure. This discovery propelled us further into exploring the advantages and capabilities of GraphQL Subscriptions for our SSE implementation.

.png)

An additional advantage offered by GraphQL Subscriptions is the ability to define them as part of a regular .gql file within a GraphQL Schema. This feature proved to be a significant selling point for us since it allowed our gql-codegen to generate TypeScript types and provide readily usable Next.js code for consumption.

During our exploration, we came across two GraphQL Subscription libraries that were compatible with Apollo Server:

- GQL Subscription over WebSocket (https://github.com/enisdenjo/graphql-ws)

- GQL Subscription over SSE (https://github.com/enisdenjo/graphql-sse)

Considering our positive experience with SSE, we decided to test the waters with GraphQL Subscription over SSE. Following a series of tests to ensure its feasibility, we proceeded with the implementation of both the backend and frontend components.

Security

Ensuring the security of our data has always been a top priority for us. When it comes to GraphQL, a challenge arises with the exposure of public subscriptions, where clients can receive changes for any document. However, it becomes crucial to verify the authorization of clients to access such data.

Fortunately, GQL SSE offers a solution by allowing us to manage custom authentication. This can be achieved by implementing an authentication check as part of the authenticate handler.

Within this handler, we have the opportunity to authenticate the client and issue a token that can be utilized if the client wishes to reconnect in the future. This mechanism empowers us to enforce authentication protocols and maintain data security throughout the subscription process:

const authenticate = async (req) => {

return await authenticateClient(req)

}

const context = (req, args) => {

return getDynamicContext(req, args)

}

That said, while the user may be authenticated, it is crucial to determine if they are authorized to access a specific resource.

To address this, we can incorporate the authorization check within the subscribe handler. Here, we can verify if the user has permission to retrieve the specified resourceID passed in the args.

const documentStepInserted = {

subscribe: async (rootValue, args: DocumentStepInsertedArgs, gContext: GContext) => {

const check = await stubCommunity.securityCheckDocument(args, gContext)

if (!check.result) {

throw errorFactory('not_own_error', 'documentStep')

}

return withFilter(

() => {

return pubsubReceiver.asyncIterator('SUBSCRIPTION_DOCUMENTSTEP_INSERTED')

},

async (payload: SGDocument) => {

return args.input.documentID.equals(payload.documentID)

}

)(rootValue, args, gContext)

}

}

If not authorised, throw errorFactory().

If authorised, create a filter withFilter().

Allow me to explain the functionality of the `withFilter()` function. When a document is updated, we trigger an event in Redis in the format of `{ documentID, value }`. The `withFilter` function plays a crucial role in filtering these events based on the requested `documentID` specified by the user.

To provide a more detailed breakdown:

1. We perform an authorization check by invoking `securityCheckDocument` to ensure that the user has the necessary permissions.

2. We initialize `withFilter` to retrieve only the document corresponding to the `documentID` provided in the `args`.

3. GraphQL uses SSE (Server-Sent Events) to deliver this value to the user.

4. And that's it – the process is complete.

With this code implementation, we have successfully managed the security aspect of our document flow. Redis is employed as a PubSub mechanism to notify subscribers when a specific resource undergoes changes.

However, you may wonder where the document itself is stored.

Data storage

Managing the storage layer for a real-time collaborative environment can be complex. Let's break it down into three key aspects:

1. Message forwarding: The backend receives messages containing the letters or words typed by users, which need to be promptly forwarded to other connected users. This ensures real-time synchronization of changes across all participants.

2. Document persistence: To prevent data loss, the backend must persist the document. This involves storing the document in a reliable and durable manner, ensuring that it can be retrieved even in the event of system failures or restarts.

3. Document retrieval: When a user joins the session at a later point, the backend needs to provide the entire document in a single call. This allows the user to access the complete state of the document and effectively participate in the collaborative session.

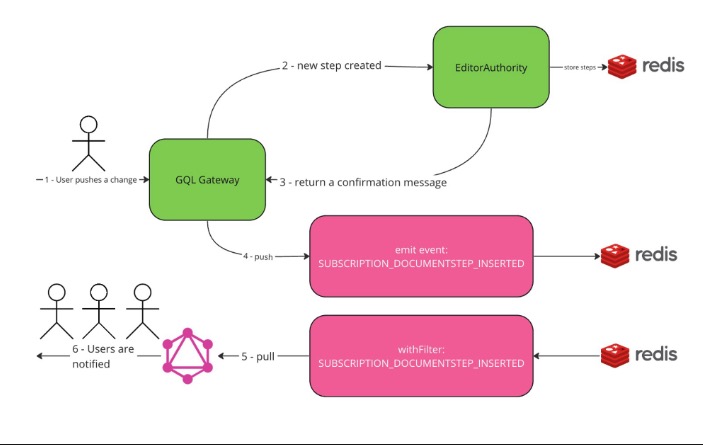

The diagram illustrates how our backend handles the storage layer, encompassing these essential functionalities. It showcases the flow of messages, document persistence, and the seamless retrieval of the complete document for new users joining the session.

Here's a breakdown of the steps involved in the storage layer management of our real-time collaborative environment:

1. The user initiates the process by pushing a message to the gateway using a GraphQL mutation.

2. The message is then forwarded to our `EditorAuthority` microservice, where it is merged with other document messages and stored in Redis, ensuring proper synchronization.

3. The `EditorAuthority` microservice acknowledges the successful storage of the message and sends a confirmation message back to the gateway, which is subsequently relayed to the user.

4. Simultaneously, the gateway publishes a message to the Redis PubSub channel named `SUBSCRIPTION_DOCUMENTSTEP_INSERTED`.

5. All Server-Sent Event (SSE) connections subscribed to the `SUBSCRIPTION_DOCUMENTSTEP_INSERTED` channel receive a notification. The `withFilter()` mechanism ensures that only relevant messages are pulled based on specified criteria.

6. Consequently, only specific users who meet the criteria receive the message through the SSE push mechanism, facilitating real-time collaboration.

By following this flow, our storage layer effectively handles message processing, storage, and distribution, ensuring efficient and secure collaboration among users in the real-time environment.

In addition to Redis, we also integrated Amazon S3 into our storage system. Let's explore the reasons behind its inclusion:

1. Handling user disconnections: In the event that all users disconnect from the real-time collaborative session, we have implemented a mechanism to transfer the document from Redis to Amazon S3. This process helps free up memory in Redis and ensures that the document remains securely stored in Amazon S3 for future access.

2. Mitigating accidental disconnections: In cases where users accidentally disconnect from the session, we have implemented an automation system. This system periodically scans Redis, identifies any pending document sessions, and automatically downloads and stores those documents in Amazon S3. This ensures that no data is lost due to accidental disconnections.

3. Resolving discrepancies in SSE disconnections: If a user becomes disconnected from the SSE and misses out on receiving 2-3 steps of document changes, our backend comes to the rescue. It retrieves the entire document from Redis, including the missed steps, and sends it back to the user. This ensures that the user remains up-to-date with the latest document state, even after experiencing disconnections.

By using Amazon S3 alongside Redis, we provide a robust storage solution that covers to different scenarios, including user disconnections, accidental disruptions, and SSE discrepancies. This ensures data integrity, resilience, and a seamless user experience in our real-time collaborative environment.

Latency

SSE proves to be exceptionally fast when compared to traditional HTTP GET operations. During our testing, particularly in collaboration with Merve, our frontend engineer, we observed the following timings:

- The initial SSE handshake operation takes approximately 50-60ms.

- Subsequent SSE push operations are completed in less than 15ms.

Interestingly, the majority of the time is consumed by GraphQL operations, specifically:

- Schema validation for the `args` transmitted by the client.

- Schema validation for the `response` delivered by the server.

While the Redis PubSub mechanism adds an additional 1-3ms delay due to network communication and message encoding/decoding, we explored the possibility of substituting Redis with an in-memory PubSub approach. However, we realised that such a change would introduce a level of risk, particularly if a microservice were to be accidentally restarted.

In summary, the efficiency of SSE is evident, with GraphQL accounting for the majority of the processing time due to schema validation. Our aim is to continually optimize performance and enhance the overall user experience within our real-time collaborative environment.

End-to-end tests

At Genie, we use Behavior-driven development (BDD) to test our backend systems. As we gained extensive experience in implementing numerous end-to-end (e2e) tests, a particular challenge arose when dealing with SSE connections, which are persistent by nature.

Fortunately, we discovered that the `graphql-sse` library offers a client specifically designed for listening to GraphQL Subscriptions. Here's a breakdown of how it works:

1. Create an instance of the `graphql-sse` client by providing the appropriate URL.

2. Supply the necessary GraphQL `variables` and schema.

3. Utilise the `next` handler to listen for incoming push operations.

This client enables us to effectively test scenarios involving SSE connections within our backend infrastructure. By leveraging this tool, we can ensure the reliability and functionality of our real-time collaborative environment through comprehensive testing procedures.

client.subscribe(

{ query, variables },

{

next: (result) => {

resolve(result.data)

client.dispose()

},

error: (e) => {

reject(error)

},

complete: () => {

resolve()

}

}

)

To ensure the reliability of our GQL Subscription implementation, we have conducted extensive stress testing within our test suite. One particular stress test involves sending 100 Document changes for various Documents and waiting for the corresponding SSE results to be received.

Through these stress tests, we have verified the robustness and dependability of our GQL Subscription functionality. This testing approach has given us confidence in the reliability of our SSE communication and its ability to handle a significant volume of Document changes seamlessly.

Flexibility and scalability

When comparing GraphQL Subscription SSE with other solutions, we have identified several advantages in terms of flexibility and scalability.

Flexibility:

- The GraphQL schema definition simplifies the implementation and maintenance of GQL Subscription. We can leverage existing GQL types, use TypeScript for enhanced type safety, and generate ready-to-use NextJS code for seamless consumption.

- Any changes made to the GQL Subscription definition automatically update the corresponding TypeScript types, making it easier for frontend developers to stay in sync.

- Authentication and authorization mechanisms are straightforward to implement and maintain within the GraphQL Subscription framework.

Scalability:

- SSE being HTTP-based allows us to scale the number of Gateway instances to handle higher workloads. This flexibility is demonstrated by companies like Uber, which successfully delivered millions of messages using SSE in their real-time push platform.

- Redis interoperability significantly reduces memory pressure and enhances scalability.

- Our security layer can be customized to accommodate different scenarios, ensuring scalability without compromising data protection.

While we are currently satisfied with GQL Subscription over SSE, we acknowledge that GraphQL Subscription over WebSocket offers distinct advantages. In the future, we may consider adopting WebSocket-based solutions for bi-directional communication, message delivery confirmation, and low-latency user experiences.

Process and methodology

As software engineers, we have taken a thoughtful approach to planning and executing our work, considering the importance of collaboration and efficient workflows. Here's an overview of how we have structured our work, but in few words we used an iterative approach, risk-driven development, with cross team communication and collaboration.

More details:

1. Research: We conducted thorough research and discussions to evaluate the pros and cons of SSE versus WebSocket for our use case. This allowed us to make informed decisions based on team feedback and considerations.

2. Tech debt & risk identification: We identified areas of tech debt and potential risks in our existing system. This included upgrading dependencies like Apollo GraphQL and RedisIO to ensure we were using the latest versions and addressing any compatibility issues. We tackled these tasks and successfully shipped them to production.

3. Prototype: We created prototypes and conducted a "client/server ping-pong demo" with our frontend engineer, Merve, to showcase the functionality and test GQL Subscription in action. We also worked with Mateo, another frontend engineer, to prototype ProseMirror document changes and conflict resolution. These prototypes were thoroughly tested and eventually deployed to production.

4. Project execution: We proceeded with the implementation of frontend-backend mutations and SSE events, ensuring that they functioned as expected. Along the way, we continuously reviewed the codebase to identify and eliminate any unnecessary complexity. We conducted internal rounds of manual testing to validate the system's behavior and performance. Additionally, we implemented stress tests to evaluate the system's robustness under high load conditions. Once we were satisfied with the results, we shipped the final implementation.

In addition to the iterative work process, we established a strong feedback loop within the team:

- We actively sought clarification and addressed any doubts or concerns that arose during the project. This allowed us to avoid premature scaling and mitigate risks.

- Communication between the Product Manager and engineers was crucial to understanding user priorities and aligning our work accordingly.

- Regular team sync activities facilitated effective collaboration and decision-making. We used a Miro board to visually represent different flows and collect feedback, enabling faster iterations and decision cycles.

We ensu

.png)

.png)